This story was published in partnership with the Verge.

Introduction

Aerospace engineer Mike Griffin says he is taking the threat of drone swarms — including those that could be driven by artificial intelligence — seriously. So is his employer, the U.S. Department of Defense, as are top officials in the Air Force.

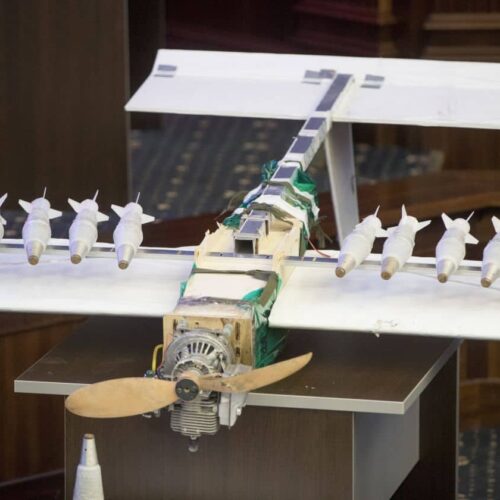

The threat is no longer theoretical. Speaking at an April 9 conference on the future of war in Washington, D.C., sponsored by the nonprofit think tank New America, Griffin noted that a small swarm of drones had attacked a Russian air base in Syria in January. Griffin, a former space program director, became the under secretary of defense for research and engineering in February.

“Certainly human-directed weapons systems can deal with one or two or a few drones if they see them coming, but can they deal with 103?” Griffin asked. “If they can deal with 103, which I doubt, can they deal with 1,000?”

Griffin’s comments came during a conversation about how to cope with drones and other weapons being outfitted with artificial intelligence, in which he argued for more earnest effort on both offensive and defensive weapons. “In an advanced society,” he said, “AI and cyber and some of these other newer realms offer possibilities to our adversaries to [target others successfully] and we must see to it that we cannot be surprised.”

Erick Gibson/New America Foundation

He called for more serious work by the Pentagon, saying “there might be an artificial intelligence arms race, but we are not yet in it.” America’s adversaries, he said, “understand very well the possible utility of machine learning. I think it’s time we did as well.”

His endorsement helps explain an expansive proposed buildup of the Defense Department’s own drone force. A report released Monday by the Center for the Study of the Drone at Bard College said the department’s proposed 2019 budget for unmanned systems and technologies would rise 25 percent over 2018 funding, to $9.39 billion. That proposal includes funding for 3,447 new air, ground and sea drones; the 2018 budget called for only 807, according to the report.

But the offensive side of drone technology is farther along than the defensive side, particularly where swarms are concerned, Griffin suggested. “There’s no provable, optimal scheme” for defending against such swarms, he said, so the Pentagon may have to settle for “a pretty good scheme,” because otherwise the enemy will have an uncontested shot at succeeding.

Some technology experts are nervous about the accelerating drive toward weapons systems that use AI to make key attack decisions. In Geneva this week, representatives of 120 United Nations member countries began discussing a potential ban on lethal AI-infused weaponry at a meeting of the Group of Governmental Experts on Lethal Autonomous Weapons Systems, convened under the U.N. Convention on Certain Conventional Weapons (CCW).

The U.S. must “commit to negotiate a legally-binding ban treaty without delay to draw the boundaries of future autonomy in weapon systems,” Mary Wareham, advocacy director of Human Rights Watch’s Arms Division, told the CCW. Such a treaty should “prevent the development, production and use of fully autonomous weapons.”

Separately, last week, thousands of Google employees signed a letter protesting the tech giant’s involvement in the Pentagon’s “pathfinder” AI program, known as Project Maven. The project is run by the Office of the Secretary of Defense to process video from tactical drones “in support of the Defeat-ISIS campaign,” according to the April 2017 memorandum by Deputy Secretary of Defense Robert O. Work that created it.

Budget documents describe Project Maven as a program developing AI, machine learning, and computer vision algorithms to “detect, classify, and track objects” seen in full-motion video captured by drones and other tools of “Intelligence, Surveillance, and Reconnaissance,” essentially a method of picking out terrorists who could be targeted in drone missile attacks.

While the program is currently meant to help analyze downloaded video streams, the Pentagon wants eventually to fit the sensors themselves with the analytical gear — potentially giving the machines the capability to make decisions for themselves, in real time. The Pentagon also wants to “support the rapid expansion of AI to other mission areas,” the budget documents state.

The overall Pentagon “algorithmic warfare” effort is supposed to get tens of millions of dollars of new funding in Trump’s military budget for fiscal year 2018. The Pentagon has requested more than $93 million specifically for Project Maven in fiscal 2019, the documents state; that’s up from $70 million in 2017, according to a Pentagon spokeswoman.

These are among the reasons that Google employees expressed concern. “We believe that Google should not be in the business of war,” read the letter, which was addressed to Google’s chief executive, Sundar Pichai, and asked the company to end its work on the project. “Building this technology to assist the US Government in military surveillance — and potentially lethal outcomes — is not acceptable,” their letter said.

When asked about the Google controversy at the New America conference, Gen. Stephen W. Wilson, the Air Force vice chief of staff, downplayed the Google employees’ concerns and said the program’s only problem is that it hasn’t been explained carefully enough. “To set the record straight, what we’re doing with Project Maven is we’re trying to take into consideration, into the routine, the processing of pictures,” he said.

“You may have an intel analyst and, after some period of training, he or she can get it right 75 percent of the time,” Wilson explained. “Average computers can do 1,000 pictures per minute with 99 percent accuracy. So how can I leverage this human-machine team and let computers do what computers are good at, and let humans do what humans are good at, and then figure out the insights and the why and the analysis rather than competitive tasks?

“This is where artificial intelligence is heading,” he added.

Wilson suggested he too is an AI enthusiast. “I think it’s going to take all of us working together across academia, across industry, across the departments, across all the national labs … and bringing them together in this competition with AI because that’s, in fact, what China’s doing.”

Tate Nurkin, a defense and security analyst with IHS Aerospace, a London-based financial research firm, also sounded an alarm at the conference about China’s massive state-run AI research program. He said China’s advances in AI could “fundamentally change the competition with the U.S.” and cited a swarm of 1,180 drones that the Chinese company EHang showed off in December at the Fortune Global Forum in Guangzhou. China has said it wants to become the global leader in AI by 2030.
